Introduction: Your Easy Guide To Time & Space Complexity

Ever written a piece of code that runs like a champ on your machine with 10 items, only to have it crash and burn in production when faced with 10,000? We’ve all been there. That painful moment is often a lesson in algorithmic efficiency, and the best way to learn it is by exploring Big O Notation Practical Examples.

If the term “Big O” sounds like intimidating computer science jargon, don’t worry. And no, we aren’t talking about that “Big O” either…get your mind out of the gutter! This guide is designed to help you learn Big O notation easily. We’ll break down the concepts into a straightforward time complexity analysis guide and get the mystery of space complexity explained, all with a human touch and production-ready code.

So, Why Bother with Big O Notation Anyway?

Imagine you and a friend are both asked to create a function to find a specific name in a contact list. Your function might be quick for a list of 20 names but slows to a crawl with 20,000. Your friend’s function might have a tiny bit more setup, but it handles 20,000 names almost as fast as it handles 20.

How do you measure that difference in a standardized way? That’s Big O.

Big O notation isn’t about measuring speed in exact milliseconds. Instead, it describes how the runtime (time complexity) or memory usage (space complexity) of an algorithm grows as the input size (n) gets larger. It’s a high-level analysis that predicts an algorithm’s scaling behavior in the worst-case scenario.

The Ultimate Guide to Big O Notation Practical Examples

Let’s dive into the most common Big O classifications you’ll see in the wild. We’ll use JavaScript for our code examples, but the principles are universal.

O(1) — Constant Time: The Gold Standard

An algorithm runs in constant time if its execution time is the same, regardless of the input size (n). It doesn’t get any more efficient than this.

Practical Example: Looking up an element in an array at a specific index.

JavaScript

/**

* Retrieves the element at a specific index in an array.

* @param {Array<any>} arr The input array.

* @param {number} index The index of the element to retrieve.

* @returns {any} The element at the given index.

*

* Time Complexity: O(1) - Constant Time

* Why: Accessing an element by its index in an array is a direct memory lookup.

* It takes the same amount of time whether the array has 10 or 10 million items.

*/

function getElementAtIndex(arr, index) {

return arr[index];

}

const numbers = [10, 20, 30, 40, 50];

console.log(getElementAtIndex(numbers, 2)); // Output: 30

Analogy: Grabbing your apartment key from your pocket. It doesn’t matter if your pocket also contains a wallet, phone, and lint—the time to find the key is always the same. 🔑

The only exception might be if you wrote that girl’s number down on a piece of paper late at night and suddenly are panicking because you can’t find it…then it always seems to take infinitely longer to locate…it’s got to be in there somewhere, doesn’t it?

O(log n) — Logarithmic Time: The Efficient Scaler

Logarithmic time is fantastic. As the input size (n) grows exponentially, the runtime grows only linearly. This usually happens when an algorithm can cut the problem size in half with each step.

Practical Example: Binary Search on a sorted array.

JavaScript

/**

* Finds the index of a target value in a sorted array using binary search.

* @param {Array<number>} sortedArr The sorted input array.

* @param {number} target The value to search for.

* @returns {number} The index of the target, or -1 if not found.

*

* Time Complexity: O(log n) - Logarithmic Time

* Why: With each comparison, we eliminate half of the remaining elements.

* This "divide and conquer" approach means the number of operations grows very

* slowly as the array size increases.

*/

function binarySearch(sortedArr, target) {

let left = 0;

let right = sortedArr.length - 1;

while (left <= right) {

const mid = Math.floor((left + right) / 2);

if (sortedArr[mid] === target) {

return mid; // Target found

} else if (sortedArr[mid] < target) {

left = mid + 1; // Search the right half

} else {

right = mid - 1; // Search the left half

}

}

return -1; // Target not found

}

const sortedNumbers = [1, 5, 12, 25, 30, 42, 55, 68, 79, 91];

console.log(binarySearch(sortedNumbers, 42)); // Output: 5

Analogy: Finding a name in a physical phone book. You open to the middle, decide which half your name is in, and repeat the process, halving the search area each time. 📖

O(n) — Linear Time: The Fair & Proportional

Linear time is the most common and intuitive complexity. The runtime grows in direct proportion to the input size (n). Double the input, and you roughly double the execution time.

Practical Example: Finding the maximum value in an unsorted array.

JavaScript

/**

* Finds the maximum value in an array of numbers.

* @param {Array<number>} arr The input array.

* @returns {number | null} The maximum value, or null if the array is empty.

*

* Time Complexity: O(n) - Linear Time

* Why: We have to inspect every single element (n) in the array once

* to be sure we've found the maximum value.

*/

function findMax(arr) {

if (arr.length === 0) {

return null;

}

let max = arr[0]; // Assume the first element is the max

for (let i = 1; i < arr.length; i++) {

if (arr[i] > max) {

max = arr[i];

}

}

return max;

}

console.log(findMax([10, 5, 32, 8, 45])); // Output: 45

Analogy: Reading every page of a book. A 200-page book will take you twice as long to read as a 100-page book.

O(n²) — Quadratic Time: The Danger Zone

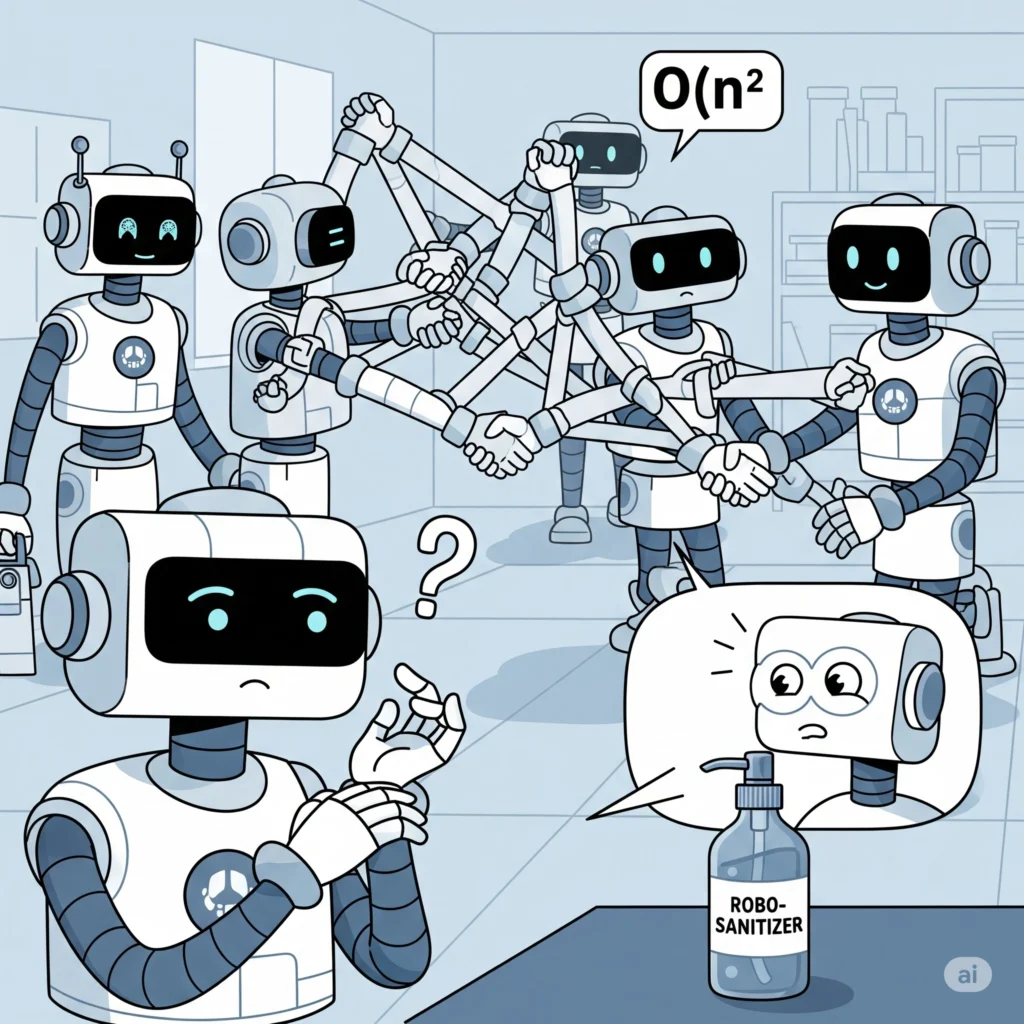

Quadratic time means the runtime grows by the square of the input size (n). If the input doubles, the runtime quadruples. This is a red flag for scalability and often involves nested loops over the same input.

Practical Example: Checking an array for duplicate values with a nested loop.

JavaScript

/**

* Checks if an array contains any duplicate values.

* @param {Array<any>} arr The input array.

* @returns {boolean} True if duplicates exist, false otherwise.

*

* Time Complexity: O(n²) - Quadratic Time

* Why: For each element (the outer loop, n), we iterate through almost

* the entire array again (the inner loop, n). This results in n * n

* operations, hence n².

*/

function hasDuplicates(arr) {

for (let i = 0; i < arr.length; i++) {

for (let j = 0; j < arr.length; j++) {

if (i !== j && arr[i] === arr[j]) {

return true; // Found a duplicate

}

}

}

return false;

}

console.log(hasDuplicates([1, 2, 3, 4, 2])); // Output: true

Analogy: A room full of people where everyone has to shake everyone else’s hand. As you add more people, the total number of handshakes increases dramatically. 🤝

And so does the number of times you have to break out your hand sanitizer…hopefully you saved a few extra travel size ones from back in COVID times!

Beyond Time: Your Practical Guide to Space Complexity Explained

Time isn’t the only resource we care about. Space complexity measures how much additional memory an algorithm needs to run, relative to its input size n.

- O(1) Space — Constant Space: The algorithm uses a fixed amount of extra memory, regardless of

n. - O(n) Space — Linear Space: The memory required grows proportionally with the input size

n.

Let’s see a practical example of the difference:

JavaScript

/**

* Calculates the sum of all numbers in an array.

* @param {Array<number>} arr The input array.

* @returns {number} The sum of the numbers.

*

* Space Complexity: O(1) - Constant Space

* Why: We only use a few fixed-size variables (sum, i). The memory

* required does not increase even if the input array has millions of items.

*/

function sumArray(arr) {

let sum = 0;

for (let i = 0; i < arr.length; i++) {

sum += arr[i];

}

return sum;

}

/**

* Creates a new array where each element is double the original.

* @param {Array<number>} arr The input array.

* @returns {Array<number>} A new array with doubled values.

*

* Space Complexity: O(n) - Linear Space

* Why: We create a brand new array ('doubledArr'). The size of this new

* array is directly proportional to the size of the input array (n).

*/

function doubleArray(arr) {

const doubledArr = [];

for (let i = 0; i < arr.length; i++) {

doubledArr.push(arr[i] * 2);

}

return doubledArr;

}

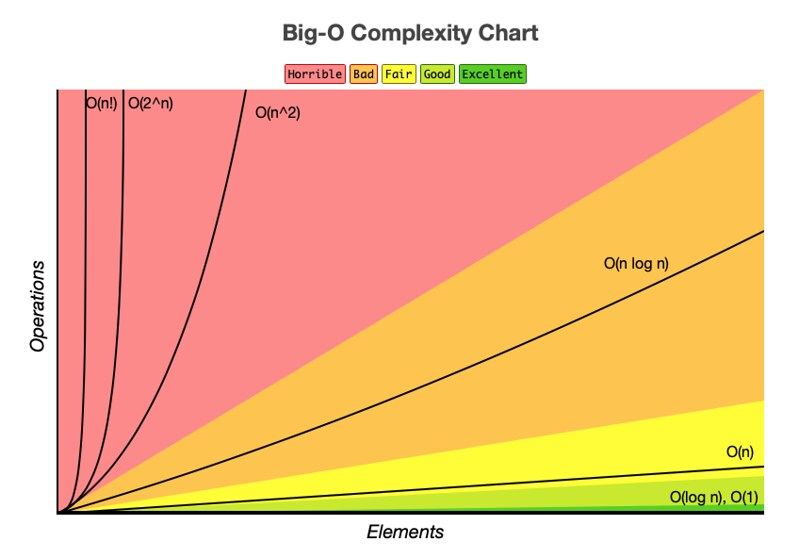

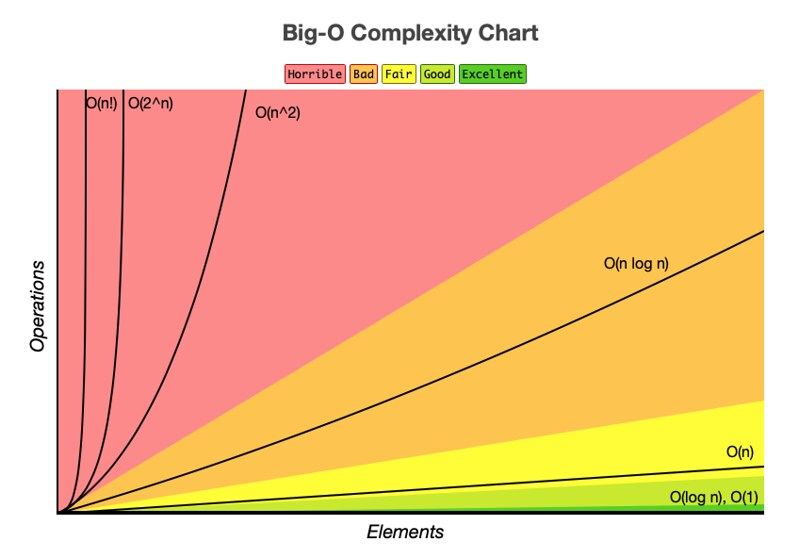

A Quick Big O Notation “Cheat Sheet”

To help you learn Big O notation easily, here’s a ranking from best (most scalable) to worst. For a great visual comparison of these growth rates, check out the Big-O Cheat Sheet website.

- O(1) – Constant (Excellent)

- O(log n) – Logarithmic (Excellent)

- O(n) – Linear (Good)

- O(n log n) – Linearithmic (Fair – e.g., efficient sorting algorithms like JavaScript’s built-in

Array.prototype.sort()) - O(n²) – Quadratic (Poor – avoid for large datasets)

- O(2ⁿ) – Exponential (Terrible – breaks on non-trivial input sizes)

Wrapping it Up – You’ve Got This!

Congratulations! By walking through these Big O notation practical examples, you’ve built a solid foundation. You’re no longer just writing code that works; you’re starting to think about writing code that scales.

This isn’t just theoretical nonsense—it’s a practical tool that helps you make better decisions, understand trade-offs between different algorithms, and prevent future performance disasters. The best way to get comfortable is to start analyzing the code you write and the libraries you use every day. Soon, it’ll become an indispensable part of your developer toolkit.