When building modern software, the ability to handle increasing loads and ensure high availability is paramount. You’ll inevitably need to scale your applications to meet growing user demand, process more data, or keep performance smooth during peak times. This leads to a fundamental question in system design: how do we scale effectively? The two primary approaches are horizontal and vertical scaling, each with its own design strategies and implications. Understanding the differences is crucial for architecting robust and efficient distributed systems.

The Core Concepts: Understanding Horizontal vs Vertical Scaling

At its heart, the distinction lies in how you augment your system’s capacity.

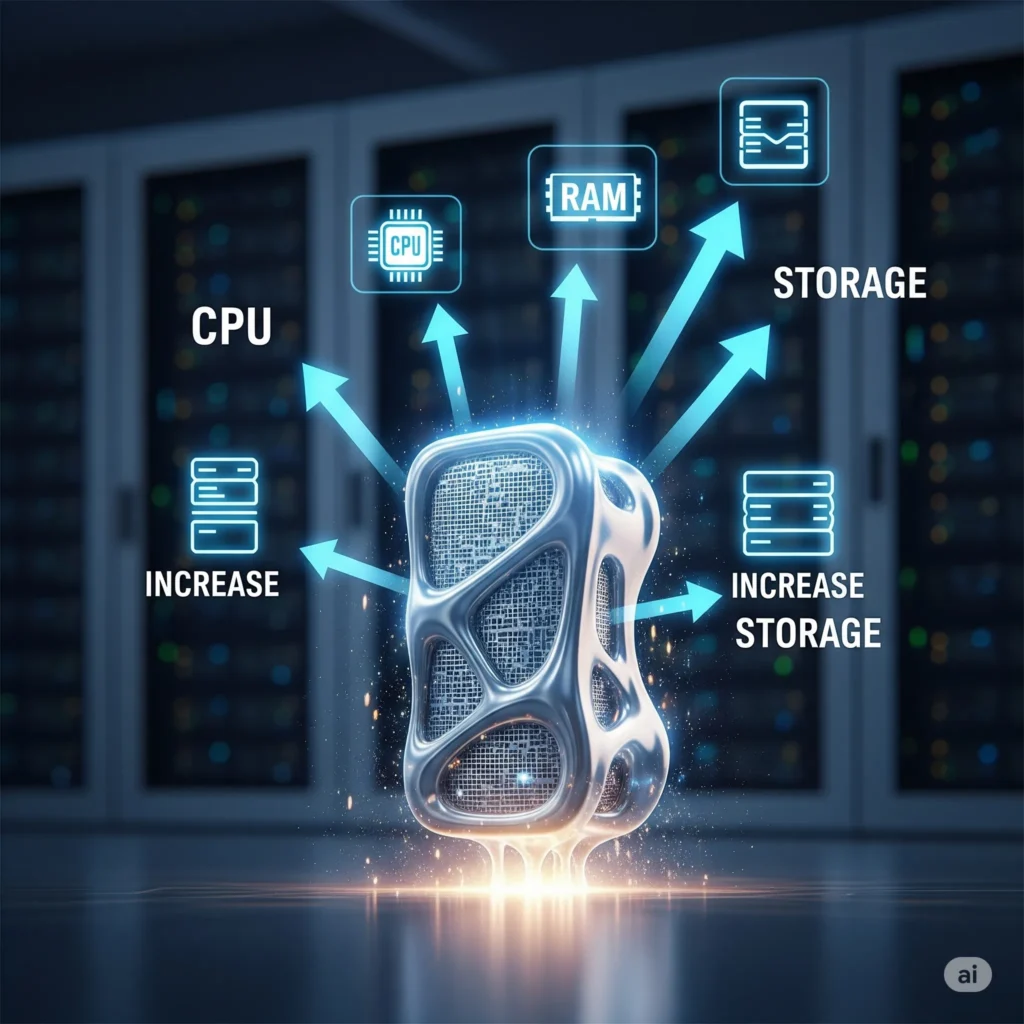

- Vertical Scaling (Scaling Up): This involves increasing the power of existing servers. Think of it as giving your current car a more powerful engine. In server terms, that means more CPU cores, more RAM, or faster storage. You’re adding resources to a single machine.

- Horizontal Scaling (Scaling Out): This involves adding more machines to your existing infrastructure. This is like adding more cars to your fleet to handle more traffic. You’re distributing the load across multiple, often identical, servers.

While both aim to improve capacity, their implementation and suitability vary significantly. Let’s dive into the scalability patterns and design strategies associated with each.

Vertical Scaling: The Straightforward Approach

Vertical scaling is often the more straightforward option, especially in the early stages of development. It’s about strengthening your existing infrastructure.

Design Strategies for Vertical Scaling

1. Upgrade Hardware: The most direct method is to invest in more powerful hardware. This could mean:

- More CPU Cores: For handling more concurrent requests or complex computations.

- Increased RAM: To store more data in memory, reducing disk I/O.

- Faster Storage (SSDs): To improve read/write speeds for databases and file access.

- Better Network Interface Cards (NICs): For increased data transfer rates.

2. Optimize Software: Before reaching for new hardware, ensure your software is as efficient as possible. This includes:

- Code Profiling and Optimization: Identifying and fixing performance bottlenecks in your application code. For deeper insights, explore various Application Performance Monitoring (APM) tools.

- Database Tuning: Optimizing queries, indexing, and connection pooling. Learn more about effective SQL Query Optimization Techniques and Database Indexing Strategies.

- Caching Mechanisms: Implementing in-memory caches (like Redis or Memcached) to reduce database load.

Pros and Cons of Vertical Scaling

Pros:

- Simplicity: Often easier to implement as it doesn’t require significant architectural changes. For database scaling techniques, it might simply mean upgrading the database server.

- Existing Infrastructure: Leverages your current setup.

Cons:

- Single Point of Failure: If the upgraded server goes down, your entire application is affected.

- Costly Limits: There are physical and financial limits to how much you can scale a single machine. High-end servers can be extremely expensive.

- Downtime for Upgrades: Hardware upgrades often require downtime.

When to Consider Vertical Scaling

- Small to Medium Loads: When your current infrastructure can handle projected growth with a moderate increase in resources.

- Early Development Stages: As a quick way to improve performance without major re-architecture.

- Specific Bottlenecks: When a particular component (e.g., CPU-bound application logic) is clearly the bottleneck and can be addressed with more powerful hardware.

Horizontal Scaling: The Distributed Powerhouse

Horizontal scaling is about building a resilient and massively scalable system by distributing the load across multiple instances. This is often the preferred approach for applications aiming for high availability and massive user bases.

Design Strategies for Horizontal Scaling

1. Load Balancing: This is the cornerstone of horizontal scaling. A load balancer sits in front of your application servers and distributes incoming traffic among them. This could be:

- Hardware Load Balancers: Dedicated physical devices.

- Software Load Balancers: Applications like Nginx or HAProxy, or cloud-managed services such as AWS Elastic Load Balancing (ELB) and Azure Load Balancer.

```nginx
# This is the main Nginx configuration file or an included file.

# The 'events' block is required and typically defines how worker connections are handled.
events {
    worker_connections 1024; # Increase if you expect a lot of concurrent connections
}

# The 'http' block is where all your web server configurations go.
http {
    # Define the upstream group for your application servers
    upstream myapp {
        server app_server_1:8080;
        server app_server_2:8080;
        server app_server_3:8080;
    }

    # Define a server block to listen for incoming requests
    server {
        listen 80; # Listen on port 80 for HTTP requests
        server_name yourdomain.com; # Replace with your actual domain name

        # Define how requests to the root path (/) are handled
        location / {
            proxy_pass http://myapp; # Proxy requests to the 'myapp' upstream group

            # Set important headers for proper request forwarding
            proxy_set_header Host $host; # Passes the original Host header to the backend
            proxy_set_header X-Real-IP $remote_addr; # Passes the client's real IP address
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for; # Appends client IP to X-Forwarded-For
            proxy_set_header X-Forwarded-Proto $scheme; # Passes the protocol (http/https)
        }

        # You can add other locations or configurations here, e.g., for static files, SSL, etc.
    }

    # You can define more server blocks for other domains or subdomains here
    # server {
    #     listen 80;
    #     server_name anotherdomain.com;
    #     location / {
    #         proxy_pass http://another_upstream_group;
    #     }
    # }
}
```

This Nginx configuration defines an upstream block named myapp that lists the backend application servers. The proxy_pass directive then directs incoming requests to these servers in a round-robin fashion by default.
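
To see what round-robin distribution means in practice, here is a small Python sketch of the selection logic. It is a simplified illustration rather than how Nginx implements it internally, and the RoundRobinBalancer class is a hypothetical name; the server addresses are the ones from the upstream group above.

```python
class RoundRobinBalancer:
    """Hands out backends in a fixed rotation, one per request."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = list(backends)
        self._next = 0  # index of the backend that gets the next request

    def pick(self):
        backend = self._backends[self._next]
        # Advance and wrap around so the rotation repeats forever.
        self._next = (self._next + 1) % len(self._backends)
        return backend


balancer = RoundRobinBalancer(
    ["app_server_1:8080", "app_server_2:8080", "app_server_3:8080"]
)
picks = [balancer.pick() for _ in range(4)]
print(picks)
# prints ['app_server_1:8080', 'app_server_2:8080', 'app_server_3:8080', 'app_server_1:8080']
```

Round-robin assumes every backend can take an equal share of traffic. If your servers differ in capacity, a weighted or least-connections strategy may be a better fit.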

2. Stateless Applications: For effective horizontal scaling, applications ideally should be stateless. This means that any request can be handled by any server instance, as no user-specific data is stored on the server itself. User sessions and state should be managed externally (e.g., in a distributed cache or database).
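
Here is a rough sketch of what statelessness buys you. The SessionStore class is an in-memory stand-in for an external store such as a distributed cache, and AppServer is a hypothetical application instance; both names are assumptions made for illustration only.

```python
import copy
import uuid


class SessionStore:
    """Stand-in for an external store (e.g. a distributed cache) shared by all instances."""

    def __init__(self):
        self._data = {}

    def save(self, session_id, state):
        # Deep copy to mimic serialization: servers never share live objects.
        self._data[session_id] = copy.deepcopy(state)

    def load(self, session_id):
        if session_id not in self._data:
            raise KeyError(f"unknown session: {session_id}")
        return copy.deepcopy(self._data[session_id])


class AppServer:
    """One application instance. It keeps no per-user state of its own."""

    def __init__(self, name, store):
        self.name = name
        self.store = store

    def login(self, username):
        session_id = uuid.uuid4().hex
        self.store.save(session_id, {"user": username, "cart": []})
        return session_id

    def add_to_cart(self, session_id, item):
        state = self.store.load(session_id)
        state["cart"].append(item)
        self.store.save(session_id, state)
        return state["cart"]


store = SessionStore()
server_a = AppServer("a", store)
server_b = AppServer("b", store)

session_id = server_a.login("alice")       # first request lands on server A
server_a.add_to_cart(session_id, "book")
cart = server_b.add_to_cart(session_id, "pen")  # next request lands on server B
print(cart)  # prints ['book', 'pen']
```

Because the session lives in the shared store, the load balancer is free to send each request to whichever instance is available, and an instance can be removed without losing anyone's cart.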

3. Database Sharding and Replication: Scaling a database is often a significant challenge. Horizontal scaling for databases involves:

- Replication: Creating read-only copies of your database. Database Replication Strategies allow read traffic to be directed to replicas, significantly reducing load on the primary write instance.

- Sharding: No, we are not referring to something you do in your pants after tackling a grande burrito and dealing with Montezuma’s Revenge. We are talking about partitioning your data across multiple database servers based on a shard key (e.g., user ID, geographical location). Each shard can then be managed and scaled independently.

```python
# Conceptual example for database sharding logic

def get_shard_id(user_id):
    """
    Determines which shard a user's data belongs to.

    This is a simplified example; real-world sharding logic can be complex.
    """
    num_shards = 10  # Assume 10 shards
    shard_id = user_id % num_shards
    return shard_id


# Example usage:
user_id = 12345
shard = get_shard_id(user_id)
print(f"User {user_id} data belongs to shard {shard}")
```

This Python function illustrates a basic sharding strategy using the modulo operator. In a real application, you’d use this logic to determine which database server to query or write to.
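
The replication side can be sketched in a similar spirit. The example below routes reads to replicas and everything else to the primary. The ReplicatedDatabase class and the host names are hypothetical, and the SELECT check is deliberately naive; treat it as a sketch of the idea, not a production router.

```python
import itertools


class ReplicatedDatabase:
    """Routes statements to a primary (writes) or to read replicas (reads)."""

    def __init__(self, primary, replicas):
        self.primary = primary
        # Cycle through replicas so reads are spread evenly across them.
        self._replicas = itertools.cycle(replicas) if replicas else None

    def route(self, sql):
        """Return the host that should run this statement."""
        is_read = sql.lstrip().upper().startswith("SELECT")
        if is_read and self._replicas is not None:
            return next(self._replicas)
        return self.primary  # writes, and reads when there are no replicas


db = ReplicatedDatabase("db-primary", ["db-replica-1", "db-replica-2"])
print(db.route("SELECT * FROM users WHERE id = 1"))   # prints db-replica-1
print(db.route("INSERT INTO users (id) VALUES (2)"))  # prints db-primary
print(db.route("SELECT * FROM users WHERE id = 2"))   # prints db-replica-2
```

One caveat worth keeping in mind: replicas usually lag slightly behind the primary, so a read that must see a write made moments earlier may need to go to the primary instead.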

4. Microservices Architecture: Breaking down a monolithic application into smaller, independent microservices allows each service to be scaled horizontally based on its specific needs. If your recommendation engine is experiencing high load, you can scale just that service, not the entire application. Learn more about Microservices vs. Monoliths to understand the architectural trade-offs.

5. Containerization and Orchestration: Technologies like Docker and Kubernetes simplify the deployment, management, and scaling of distributed applications, making horizontal scaling much more manageable. For a deeper understanding of how these work, check out our Kubernetes Pods Deployments & Services Explained blog post.
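
As an illustration of how an orchestrator can decide how many instances to run, here is a sketch of the core ratio used by the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function name and the min/max defaults are assumptions for this example, and the real autoscaler adds tolerances and stabilization behavior that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count in proportion to how far the metric is from its target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds so scaling never runs away.
    return max(min_replicas, min(max_replicas, raw))


# 3 replicas averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(3, 90, 60))   # prints 5
# 4 replicas averaging 30% CPU against a 60% target: scale in.
print(desired_replicas(4, 30, 60))   # prints 2
# 8 replicas at double the target would need 16, but the cap is 10.
print(desired_replicas(8, 100, 50))  # prints 10
```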

Further Reading

To truly master the art of scaling and system design, explore these related topics: