Demystifying AI Decisions

You’re stepping into the dynamic world of IT, whether fresh out of college or a bootcamp, and you’re probably hearing a lot about Artificial Intelligence (AI). From automating tasks to making complex predictions, AI is everywhere. But here’s a secret: for all its power, AI can sometimes feel like a “black box.” It gives you an answer, but doesn’t always tell you why or how it got there. That’s where Explainable AI, or XAI, comes in.

Think of XAI as the translator between sophisticated AI models and the everyday business decisions they influence. It’s not just for the data scientists in the lab; it’s increasingly crucial for everyone, especially those of you who will be working alongside these systems in business roles. Understanding why an AI made a certain recommendation isn’t just interesting – it’s vital for trust, effective decision-making, and even career growth.

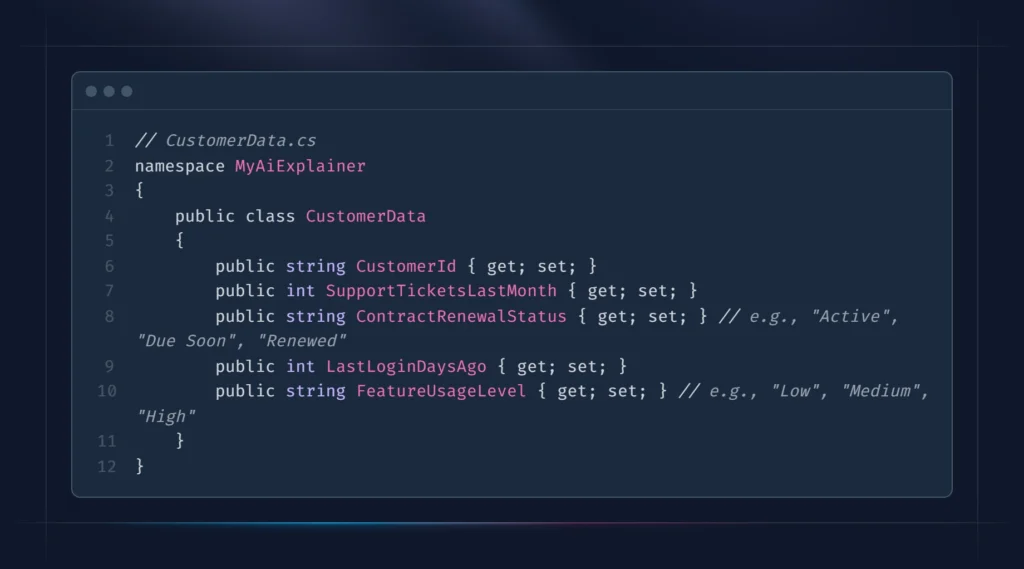

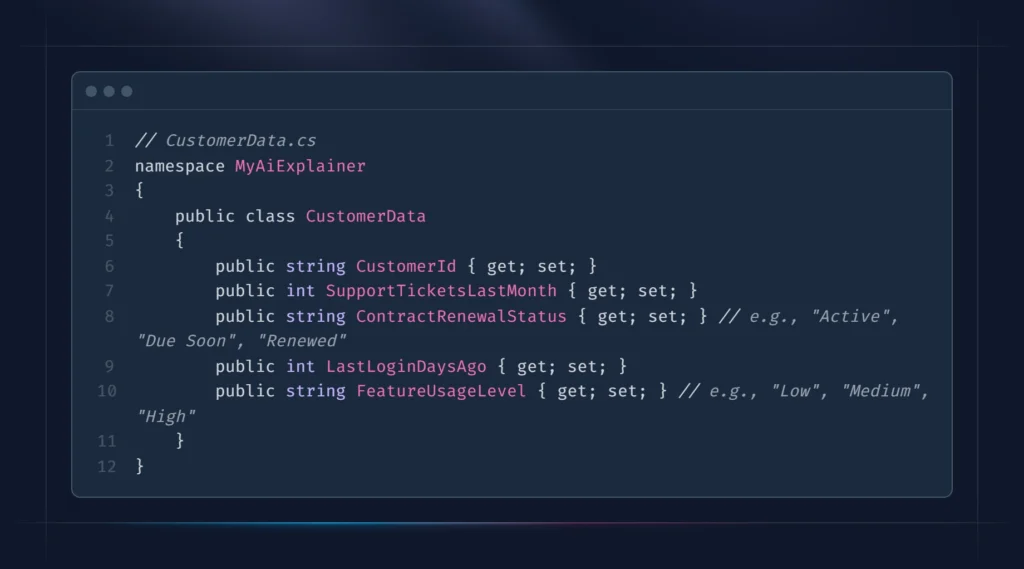

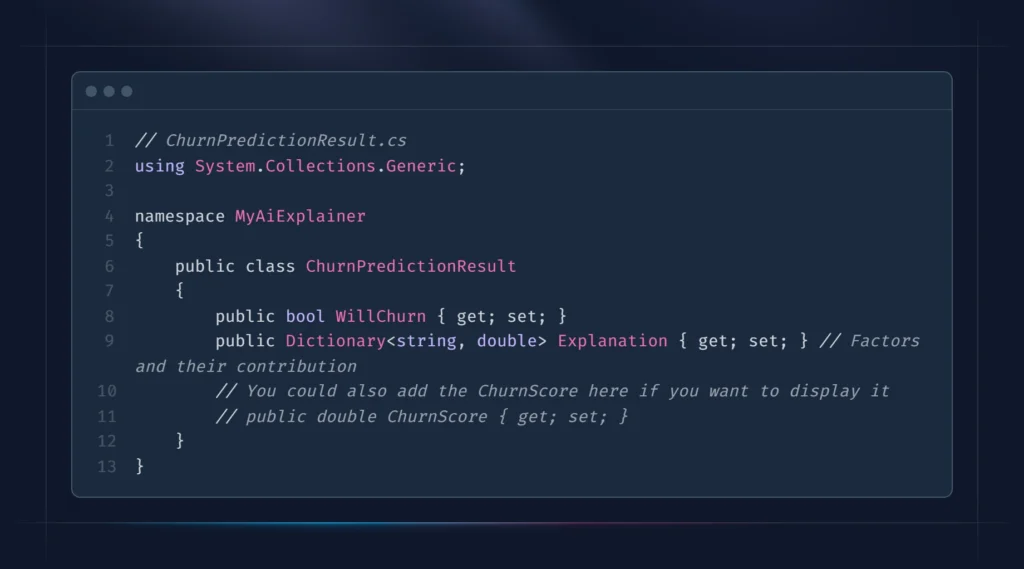

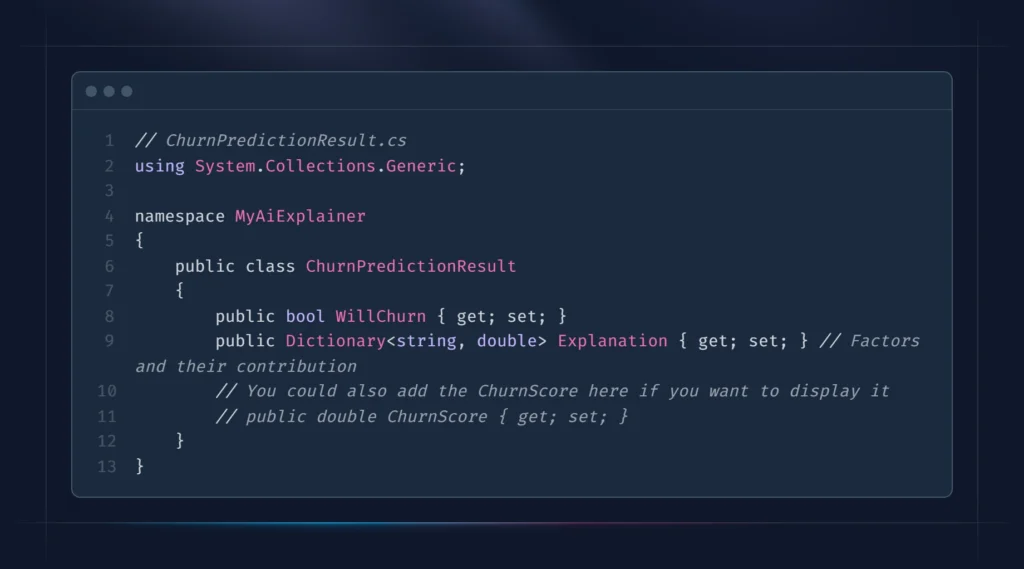

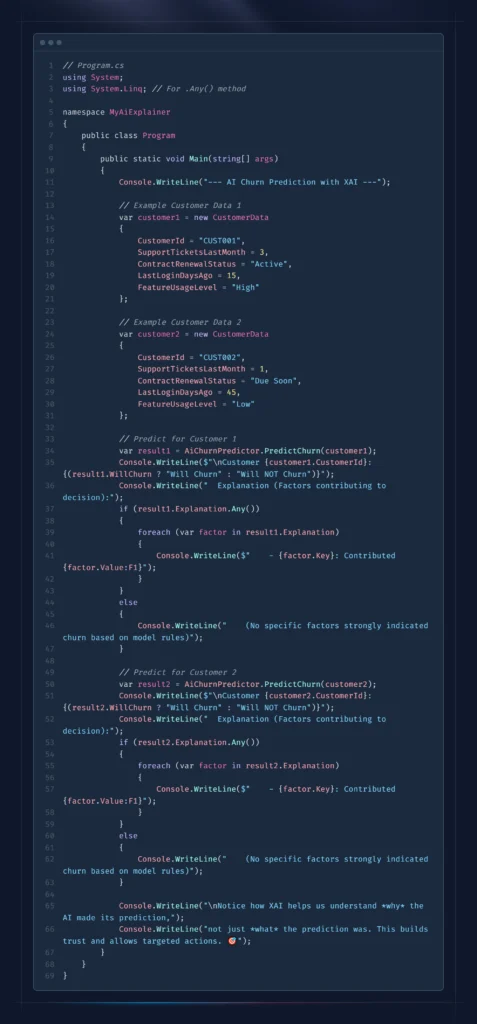

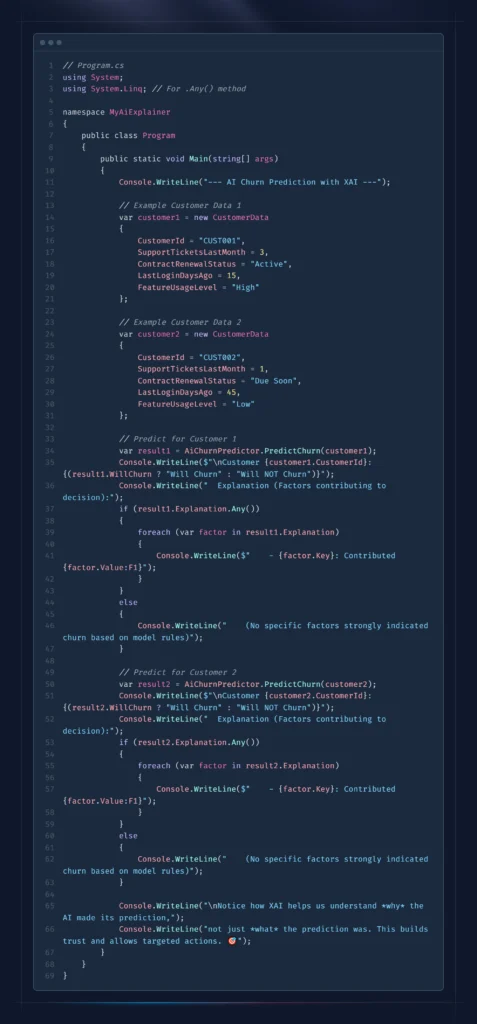

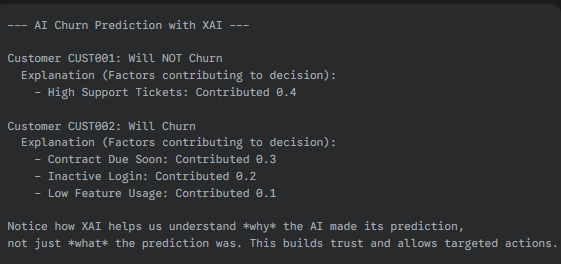

To illustrate, let’s consider a simplified scenario: an AI model that predicts whether a customer will churn (cancel their service). Without XAI, the AI might just say, “This customer will churn.” With XAI, it can tell you why.

After running this, a user might expect to see the following output in the console log:

Code Sample can be found here
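The original sample is linked rather than reproduced here, so as a stand-in, here is a minimal sketch of the churn scenario. It assumes a toy logistic model with hand-picked weights; every feature name, weight, and baseline value below is hypothetical and chosen only for illustration. For a linear model like this one, each feature's contribution can be read off directly as weight × (customer value − typical value), which is what turns "this customer will churn" into "this customer will churn mainly because of X."

```python
import math

# Hypothetical weights for a toy logistic-regression churn model.
# All feature names and numbers are invented for illustration.
WEIGHTS = {
    "support_tickets_last_90d": 0.6,
    "months_as_customer": -0.05,
    "monthly_price_usd": 0.02,
}
BIAS = -1.0

# Hypothetical "typical customer" used as the comparison baseline.
BASELINE = {
    "support_tickets_last_90d": 1,
    "months_as_customer": 24,
    "monthly_price_usd": 50,
}


def churn_probability(customer):
    """Logistic model: sigmoid of the weighted sum of the features."""
    score = BIAS + sum(WEIGHTS[name] * customer[name] for name in WEIGHTS)
    return 1 / (1 + math.exp(-score))


def explain(customer):
    """Rank features by how far they move the score away from the baseline.

    For a linear model, weight * (value - baseline value) exactly decomposes
    the difference between this customer's score and the baseline score.
    """
    contributions = {
        name: WEIGHTS[name] * (customer[name] - BASELINE[name]) for name in WEIGHTS
    }
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


customer = {
    "support_tickets_last_90d": 5,
    "months_as_customer": 3,
    "monthly_price_usd": 80,
}

print(f"Churn probability: {churn_probability(customer):.2f}")
for name, value in explain(customer):
    direction = "raises" if value > 0 else "lowers"
    print(f"  {name} {direction} the churn score by {abs(value):.2f}")
```

The ranked list is the part a business user would actually read: it names the factors pushing this particular customer toward churn, in order of influence. Real models are rarely this simple, and more complex ones need dedicated explanation techniques, but the shape of the answer is the same.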

Building Trust: The Imperative of AI Transparency

In many real-world scenarios, “getting the right answer” isn’t enough. Imagine an AI model recommending which loan applications to approve, or flagging a customer for potential fraud. If that AI can’t explain its reasoning, how can a business user – or even a customer – trust its decisions?

This is where AI transparency becomes non-negotiable, for executives and business users alike. Without an understanding of the underlying logic, businesses face several risks:

- Trust Deficit: If you can’t explain why a customer was denied a service, or why a marketing campaign targeted a specific group, trust erodes, both internally and with your customers.

- Bias Detection: AI models learn from data. If that data contains historical biases (which it often does), the model can reproduce them. XAI can reveal whether a model is making unfair or discriminatory choices, so teams can address them head-on.

- Regulatory Compliance: Regulations increasingly expect explanations for automated decisions that affect individuals. The EU’s GDPR, for example, gives people rights around solely automated decision-making, and regulated industries such as finance and healthcare face additional scrutiny. XAI can help teams meet these requirements.

- Improved Performance: When you understand why an AI model made a mistake, you can improve it. XAI isn’t just about explanations; it’s about making AI systems better and more robust.
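To make the bias-detection point concrete, here is a minimal sketch of one very basic check: comparing how often a model approves applicants from different groups. The decision log, group labels, and function names are hypothetical, and a real fairness review would look at far more than a single ratio.

```python
from collections import defaultdict

# Hypothetical decision log: (applicant group, did the model approve?).
# The groups and outcomes are invented purely for illustration.
DECISIONS = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]


def approval_rates(records):
    """Return the share of approved decisions for each group."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for group, was_approved in records:
        total[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / total[group] for group in total}


def selection_ratio(rates):
    """Lowest approval rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


rates = approval_rates(DECISIONS)
ratio = selection_ratio(rates)
for group, rate in sorted(rates.items()):
    print(f"{group}: {rate:.0%} approved")
print(f"Selection ratio: {ratio:.2f}")
```

A ratio well below 1.0 doesn’t prove the model is unfair on its own, but it is a signal to dig into why the groups are treated differently. One commonly cited rule of thumb, the “four-fifths rule” from US employment guidelines, treats ratios below 0.8 as worth a closer look.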

Real-World Impact: How XAI Boosts Business Outcomes

For those of you looking to make an impact in your first IT role, understanding the business benefits of interpretable AI is a huge advantage. It’s not just about technical prowess; it’s about being able to bridge the gap between technology and business outcomes.

Here are a few ways XAI translates into real business value:

- Better Strategic Planning: When leaders understand the drivers behind AI predictions (e.g., “our sales are projected to grow because of these specific market trends identified by the AI”), they can make more informed strategic decisions.

- Enhanced Customer Experience: Imagine a customer service chatbot that can not only answer a query but also explain why a particular policy applies to them. This level of clarity builds stronger customer relationships.

- Risk Mitigation: By understanding how AI models assess risk, businesses can proactively identify potential issues, whether it’s in fraud detection or operational efficiency.

- Faster AI Adoption: When non-technical stakeholders, from sales to HR, can understand and trust AI, they are more likely to embrace it and champion its use across the organization.

Bridging the Gap: Explaining AI to Non-Technical Teams

You might find yourself in a position where you need to explain AI models to colleagues who don’t have a technical background. This is where your understanding of XAI techniques will shine. It’s less about the complex algorithms and more about the “why” and the “what it means.” When explaining AI to non-technical audiences, simplification is key.

Here are a few tips, drawing on the spirit of XAI:

- Start with the Problem: Frame the AI solution around a business problem everyone understands. “Our AI helps predict customer churn because losing customers costs us money.”

- Use Analogies: Complex concepts can be made simple. “Think of our recommendation engine like a super-smart personal shopper who remembers everything you’ve ever liked and disliked.”

- Focus on Inputs and Outputs: What data goes in, and what decision or prediction comes out? Keep it high-level.

- Visualize: Use simple charts, graphs, or even flowcharts to show the decision path. Avoid code snippets or overly dense diagrams.

- Explain the “Why”: If an AI flags something, can you show which specific factors most influenced that decision? Tools exist that highlight key features impacting a prediction, making it easier to answer the “why.”

- Highlight Impact: Always bring it back to the business benefit. How did this AI model save money, improve efficiency, or make a process fairer?
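For the “Explain the Why” tip, here is a minimal sketch of how a what-if check can surface the factors behind a single flagged decision. It swaps each input for a typical value, one at a time, and records how much the model’s score drops. This is a simple baseline-substitution check rather than any particular XAI library, and the scoring rules, feature names, and typical values are all hypothetical. The code is for you; the plain-language lines it prints are what you would share with colleagues.

```python
def fraud_score(txn):
    """Stand-in for an opaque model. Returns risk points from 0 to 100.

    The rules are hypothetical and exist only so the example can run.
    """
    score = 10
    if txn["amount_usd"] > 1000:
        score += 40
    if txn["is_foreign"]:
        score += 30
    if txn["hour"] < 6:
        score += 10
    return score


# Hypothetical "typical transaction" used as the comparison point.
TYPICAL = {"amount_usd": 60, "is_foreign": False, "hour": 14}


def what_if(model, instance, typical):
    """For each feature, measure how much the score falls when that one
    feature is replaced by its typical value. Largest effect comes first."""
    original = model(instance)
    effects = {}
    for feature in instance:
        altered = dict(instance)
        altered[feature] = typical[feature]
        effects[feature] = original - model(altered)
    return sorted(effects.items(), key=lambda kv: kv[1], reverse=True)


flagged = {"amount_usd": 2500, "is_foreign": True, "hour": 3}

print(f"Risk score: {fraud_score(flagged)} out of 100")
for feature, effect in what_if(fraud_score, flagged, TYPICAL):
    print(f"  If {feature} had been typical, the score would drop by {effect} points")
```

Because the check only calls the model and never looks inside it, the same idea works for models you cannot inspect. It does ignore interactions between features, so treat the ranking as a starting point for a conversation rather than a complete explanation.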

As you begin your career, remember that technical skills are just one piece of the puzzle. The ability to communicate complex ideas, build trust, and ensure the ethical deployment of technology like AI will make you an invaluable asset. Understanding explainable AI will not only give you a significant edge but also empower you to be a more effective bridge between technology and business goals.

Further Reading:

- For more on the strategic importance of XAI: IBM’s take on Explainable AI

- A deeper dive into the how-to of explaining AI: Google Cloud on AI Models